The Ultimate Guide to LangChain, LLMs, and Their Applications

The rapid advancement of artificial intelligence (AI) has changed how we interact with technology, and two pieces sit at the core of this shift: frameworks like LangChain and Large Language Models (LLMs). LangChain simplifies the process of building AI applications, while LLMs give those applications the ability to understand and generate human-like language. Together, they help developers create solutions that are both scalable and intelligent.

LLMs have redefined AI capabilities, making it possible for machines to process, analyze, and generate human language with remarkable accuracy. From powering chatbots to enhancing search engines, LLMs have opened up many opportunities in natural language processing (NLP) and beyond.

This article will provide a deep dive into the world of LangChain and LLMs. By the end of this guide, you will understand what LangChain is, how LLMs work, and how these technologies come together to create groundbreaking applications. We’ll also explore key components like chains, agents, and memory, with practical examples to solidify your understanding.

Understanding LangChain

LangChain is an open-source framework designed to simplify the process of building applications powered by LLMs. It abstracts the complexities of interacting with LLMs and allows developers to focus on the application itself. At its core, LangChain provides a structured way to connect language models with various data sources, workflows, and real-world use cases.

Key Features of LangChain

- Modularity: Easily customizable components for flexibility.

- Integration: Seamless connections with tools like Hugging Face, OpenAI API, and databases.

- Support for Chains: Build workflows where multiple steps are executed sequentially or in parallel.

- Memory: Maintain context across user interactions, enhancing conversational AI systems.

Use Cases of LangChain

- Chatbots and Virtual Assistants: Enhance customer interactions by creating intelligent conversational agents.

- Document Summarization: Simplify large text data into concise summaries.

- Question-Answering Systems: Provide precise answers to user queries by integrating with LLMs and databases.

- Data Augmentation for AI Models: Generate synthetic data for model training or testing.

Why LangChain Matters

LangChain is critical for developers who want to unlock the full potential of LLMs while overcoming the challenges of managing complex AI workflows. Without LangChain, developers often have to write extensive boilerplate code to connect LLMs with APIs, databases, and other tools. LangChain simplifies this by providing pre-built modules and chains, enabling rapid prototyping and scaling.

Benefits of LangChain

- Efficiency: Reduces development time by abstracting repetitive tasks.

- Scalability: Supports dynamic workflows for real-world applications.

- Focus: Lets developers concentrate on solving problems rather than managing infrastructure.

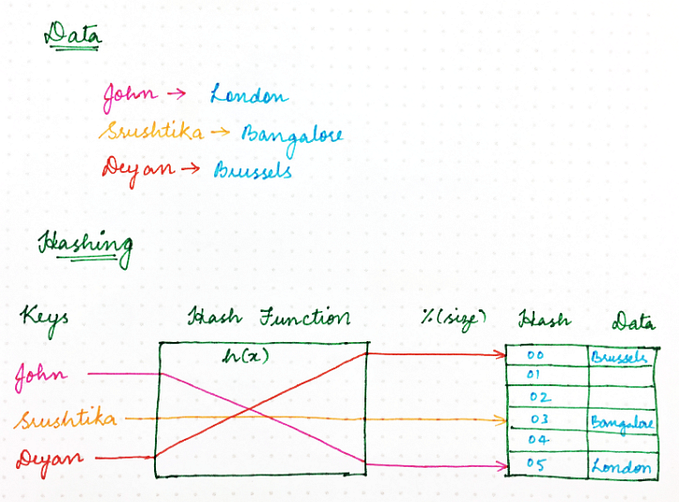

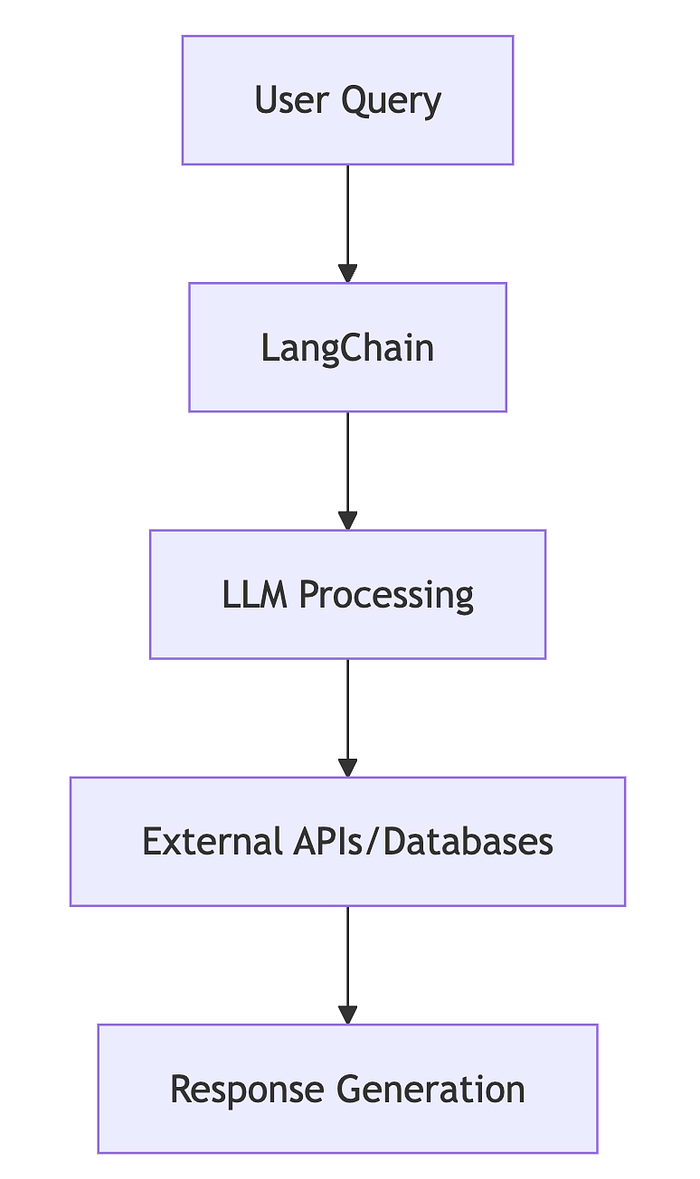

Below is a simple flow diagram that illustrates how LangChain connects LLMs with external data sources and workflows:

Large Language Models (LLMs)

Large Language Models (LLMs) are advanced AI systems designed to understand and generate human-like language. These models are trained on massive datasets comprising text from books, articles, websites, and more. By leveraging this training, LLMs can perform tasks like translation, summarization, question-answering, and even creative writing.

Examples of Popular LLMs

- GPT-4: Developed by OpenAI, known for its conversational capabilities and reasoning.

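- BERT (Bidirectional Encoder Representations from Transformers): An encoder-only transformer model by Google, suited to language-understanding tasks such as classification and question answering rather than free-form text generation.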

- Claude: An AI assistant by Anthropic, designed for safe and ethical AI interactions.

Capabilities of LLMs

- Natural Language Understanding (NLU): Analyze and extract meaning from user input.

- Natural Language Generation (NLG): Create coherent and contextually relevant text.

- Reasoning: Perform logical deductions and complex problem-solving.

Below is a Python snippet demonstrating how an LLM can generate text using the OpenAI API (openai Python package, version 1.0 or later):

from openai import OpenAI

# Set up the client; prefer the OPENAI_API_KEY environment variable over a hard-coded key
client = OpenAI(api_key="your-api-key")

# Prompt for the LLM
prompt = "Explain the benefits of using LangChain with LLMs."

# Generate a response (swap in any chat model your account can access)
response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": prompt}],
    max_tokens=100,
)

print(response.choices[0].message.content.strip())

Challenges in LLMs

While LLMs have remarkable capabilities, they come with challenges:

- Data Bias: LLMs may generate biased outputs due to inherent biases in training data.

- Interpretability: Understanding why a model generates specific responses can be difficult.

- Computational Overhead: Training and running LLMs require significant computational resources.

Mitigating these challenges often involves fine-tuning models, implementing robust evaluation metrics, and leveraging frameworks like LangChain to streamline workflows.

Exploring Free LLMs

Open-source LLMs are transforming the AI landscape by providing developers with powerful tools without the high costs associated with proprietary systems. These models are often developed and maintained by a community of researchers and developers, making them accessible and highly customizable.

Hugging Face Transformers

Hugging Face Transformers is a popular open-source library that provides pre-trained models for a wide range of natural language processing (NLP) tasks. It simplifies the integration of LLMs into applications through its user-friendly API and extensive model repository.

Key features:

- Access to thousands of pre-trained models (e.g., BERT, GPT-2, T5).

- Multi-task support: Translation, summarization, question answering, etc.

- Customization: Fine-tune models on specific datasets.

Example:

from transformers import pipeline

# Load a pre-trained Hugging Face model
summarizer = pipeline("summarization")

# Use the model
text = "LangChain provides an easy way to integrate LLMs with real-world applications."
summary = summarizer(text, max_length=20, min_length=5, do_sample=False)

print(summary[0]['summary_text'])

Other Free/Open LLMs

- GPT-J: A powerful open-source alternative to GPT-3, designed for text generation tasks.

- BLOOM: A multilingual LLM designed for diverse NLP tasks.

- Dolly: An open-source LLM from Databricks, fine-tuned on an instruction-following dataset.

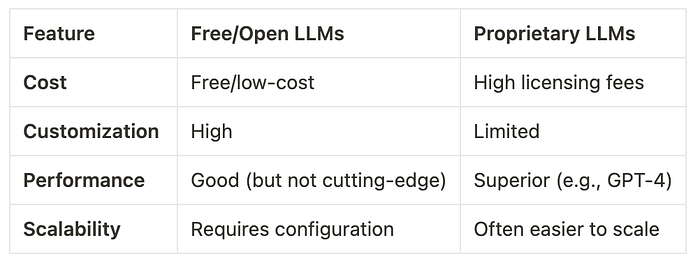

How Free LLMs Compare to Proprietary Options

Open-source LLMs are ideal for cost-sensitive projects but may lag in performance compared to proprietary systems like OpenAI’s GPT-4 or Google’s PaLM.

Practical Use Cases for These LLMs

- Chatbots: Build cost-efficient conversational agents.

- Content Generation: Automate blog writing, summarization, and creative content.

- Education: Use for e-learning applications and language tutoring.

- Custom NLP Tasks: Fine-tune for domain-specific tasks like legal document analysis.

Understanding Chains in LangChain

Chains are a core concept in LangChain, enabling developers to structure workflows involving LLMs. A chain consists of multiple steps where outputs from one step serve as inputs to the next.
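
The idea that each step's output feeds the next step can be sketched in plain Python. This is a conceptual illustration only, not LangChain's API: make_chain, clean, and to_question are hypothetical names, and a real chain would call a prompt template or an LLM inside the steps.

```python
from functools import reduce
from typing import Callable, Sequence

Step = Callable[[str], str]

def make_chain(steps: Sequence[Step]) -> Step:
    """Return a function that runs each step on the previous step's output."""
    def run(value: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run

# Hypothetical steps; a real chain would call a prompt template or an LLM here.
def clean(text: str) -> str:
    return text.strip()

def to_question(topic: str) -> str:
    return f"What are the benefits of {topic}?"

chain = make_chain([clean, to_question])
print(chain("  LangChain  "))  # What are the benefits of LangChain?
```

Because every step has the same shape (text in, text out), steps can be reordered or reused in other chains without changes.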

Purpose of Chains

- Modularize AI workflows.

- Simplify multi-step processes like data retrieval and response generation.

- Enhance the reusability of components.

Types of Chains

- Simple Chains: Execute a single task like generating text from a prompt.

- Sequential Chains: Combine multiple steps where outputs are passed sequentially.

- Parallel Chains: Execute tasks concurrently and aggregate results.
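
The third type, parallel chains, can be sketched with Python's standard library. This is a rough illustration under assumptions, not LangChain's implementation: run_parallel and the two branch functions are hypothetical stand-ins for independent LLM calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

def run_parallel(branches: Dict[str, Callable[[str], str]], value: str) -> Dict[str, str]:
    """Run every branch on the same input concurrently and collect results by name."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, value) for name, fn in branches.items()}
        return {name: future.result() for name, future in futures.items()}

# Hypothetical branches standing in for two independent LLM calls.
branches = {
    "summary_prompt": lambda text: f"Summarize: {text}",
    "keywords_prompt": lambda text: f"List keywords for: {text}",
}

results = run_parallel(branches, "LangChain connects LLMs to data sources.")
print(results["summary_prompt"])
print(results["keywords_prompt"])
```

Threads suit this pattern because LLM calls spend most of their time waiting on the network. Within LangChain itself, the RunnableParallel class in langchain_core serves a similar purpose.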

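Example of a simple chain, composed from two steps with RunnableLambda and the | operator (requires the langchain-core package):

from langchain_core.runnables import RunnableLambda

# Define the steps; each one receives the previous step's output
make_question = RunnableLambda(lambda topic: f"What are the benefits of {topic}?")
add_instruction = RunnableLambda(lambda question: f"{question} Answer in two sentences.")

# Create and run the chain
chain = make_question | add_instruction
output = chain.invoke("LangChain")

print(output)

Sequential Chains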

Sequential chains in LangChain are designed for workflows that require multiple dependent steps. Each step in a sequential chain takes the output of the previous step as its input.

Benefits of Sequential Chains

- Streamlined Execution: Automates complex workflows.

- Flexibility: Easily customizable for various use cases.

- Context Management: Passes information across steps seamlessly.

Use Cases of Sequential Chains

Multi-Step Q&A Systems

- Step 1: Retrieve relevant documents from a database.

- Step 2: Use an LLM to generate a concise answer based on the retrieved documents.
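
The two Q&A steps above can be sketched in plain Python. Everything here is an assumption made for illustration: DOCUMENTS is a hypothetical in-memory stand-in for a database, retrieval is naive word overlap rather than a real search index, and fake_llm is a stub so the sketch runs offline.

```python
from typing import Callable, List

# Hypothetical in-memory "database"; a real system would query a vector store or search index.
DOCUMENTS = [
    "LangChain is an open-source framework for building LLM applications.",
    "Memory lets a chatbot keep context across turns.",
    "Agents decide which tool to call for a given request.",
]

def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Step 1: rank documents by how many question words they share."""
    words = set(question.lower().split())
    scored = [(len(words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]

def build_prompt(question: str, context: List[str]) -> str:
    """Combine the retrieved documents and the question into one prompt."""
    joined = "\n".join(f"- {doc}" for doc in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"

def answer(question: str, llm: Callable[[str], str]) -> str:
    """Step 2: pass the retrieved context to the LLM and return its answer."""
    context = retrieve(question, DOCUMENTS)
    return llm(build_prompt(question, context))

# Stub LLM so the sketch runs offline; replace with a real model call.
def fake_llm(prompt: str) -> str:
    return f"(model response to a {len(prompt)}-character prompt)"

print(answer("What is memory in a chatbot?", fake_llm))
```

Passing the LLM in as a function keeps the retrieval step testable on its own, without network access.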

Content Generation Pipelines

- Step 1: Generate an outline for an article.

- Step 2: Expand each section into detailed paragraphs.

- Step 3: Summarize the article into a short brief.

Example:

from langchain_core.runnables import RunnableLambda

# Define steps; each one receives the previous step's output
def step1(topic):
    return f"Generate an outline for: {topic}"

def step2(outline_request):
    return f"Expand the outline: {outline_request}"

# Create a sequential chain by piping the steps together
chain = RunnableLambda(step1) | RunnableLambda(step2)
result = chain.invoke("an article on LangChain")

print(result)

Agents in LangChain

Agents in LangChain are specialized entities designed to perform decision-making and task execution within an AI workflow. These agents act as intermediaries that analyze user inputs, interact with language models, and decide which tools or actions to invoke. Their adaptability makes them ideal for handling dynamic, complex, or multi-step processes.

How do Agents work with LLMs?

Agents utilize LLMs to interpret natural language instructions and process contextual information. They rely on the LLM’s ability to analyze queries and execute tasks in real time. By combining LLMs’ natural language understanding with agents’ dynamic workflows, developers can create applications that intelligently handle varying user requirements.

For example:

- An agent might interpret a user query like, “Book a flight for next Friday to New York and send me hotel recommendations,” and dynamically call APIs for flight bookings and hotel suggestions.
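
The decide-then-act loop behind that example can be sketched in plain Python. This is a simplified illustration, not LangChain's agent API: the tools are hypothetical stubs, and choose_tools uses keyword matching where a real agent would ask an LLM which tools to call.

```python
from typing import Callable, Dict, List

# Hypothetical tools; real ones would call booking or hotel APIs.
def book_flight(request: str) -> str:
    return "flight search started"

def recommend_hotels(request: str) -> str:
    return "hotel recommendations queued"

TOOLS: Dict[str, Callable[[str], str]] = {
    "book_flight": book_flight,
    "recommend_hotels": recommend_hotels,
}

def choose_tools(request: str) -> List[str]:
    """Stand-in for the LLM's decision step: pick tools by keyword."""
    text = request.lower()
    chosen = []
    if "flight" in text:
        chosen.append("book_flight")
    if "hotel" in text:
        chosen.append("recommend_hotels")
    return chosen

def run_agent(request: str) -> Dict[str, str]:
    """Ask the decision step which tools apply, then call each one."""
    return {name: TOOLS[name](request) for name in choose_tools(request)}

request = "Book a flight for next Friday to New York and send me hotel recommendations"
print(run_agent(request))
```

Keeping the tool registry separate from the decision step means new tools can be added without touching the routing logic.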

Examples of Agent-Driven Applications

- Personal Assistants: Schedule meetings, draft emails, or set reminders based on user preferences.

- AI Automation: Simplify customer support through ticket classification and issue resolution.

- Interactive Applications: Enable adaptive learning tools, intelligent simulations, or gaming decision systems.

Memory in LangChain

Memory in LangChain is a feature that allows applications to maintain context across multiple interactions. This ensures that AI systems, like chatbots or virtual assistants, provide continuity in conversations or tasks, enabling more personalized and meaningful user experiences.

Types of Memory

- Short-Term Memory: Retains contextual information for the duration of a session. This is useful for tasks that require limited, immediate context.

- Long-Term Memory: Stores information persistently, enabling applications to access data across multiple sessions. Ideal for personalizing user experiences or maintaining history for in-depth tasks.
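
The difference between the two memory types can be sketched in plain Python. These classes are hypothetical illustrations, not LangChain's memory classes: short-term memory is modeled as a bounded buffer of recent turns, and long-term memory as a JSON file that outlives the session.

```python
import json
import tempfile
from collections import deque
from pathlib import Path
from typing import Deque, Dict, List

class ShortTermMemory:
    """Keeps only the most recent turns of the current session."""
    def __init__(self, max_turns: int = 3) -> None:
        self.turns: Deque[str] = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append(f"{speaker}: {text}")

    def as_context(self) -> str:
        return "\n".join(self.turns)

class LongTermMemory:
    """Persists facts per user to a JSON file so they survive across sessions."""
    def __init__(self, path: Path) -> None:
        self.path = path

    def load(self) -> Dict[str, List[str]]:
        return json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, user: str, fact: str) -> None:
        data = self.load()
        data.setdefault(user, []).append(fact)
        self.path.write_text(json.dumps(data))

session = ShortTermMemory(max_turns=2)
session.add("user", "My order arrived damaged.")
session.add("bot", "Sorry to hear that. Which item?")
session.add("user", "The headphones.")
print(session.as_context())  # only the last two turns remain

# A temporary directory keeps the sketch self-cleaning; a real app would use a stable path.
with tempfile.TemporaryDirectory() as tmp:
    store = LongTermMemory(Path(tmp) / "memory.json")
    store.remember("alice", "prefers email follow-ups")
    print(store.load())
```

The bounded buffer also keeps prompts from growing without limit as a conversation gets longer.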

Practical Applications of Memory

- Conversational AI: Tailor responses based on prior user interactions.

- Customer Support Systems: Remember previous queries to provide consistent support.

- LLM-Based Workflows: Maintain coherence in lengthy, multi-turn tasks like document creation or coding assistance.

For instance, in a customer support chatbot, memory allows the system to recall the user’s complaint and suggest follow-up solutions even after several exchanges.

Tools and Libraries That Complement LangChain

LangChain’s functionality can be greatly extended by integrating it with other tools and libraries. Below are some popular choices:

Hugging Face

- Provides a vast repository of pre-trained language models, making it easy to deploy specific LLMs for unique tasks.

- Example: Fine-tuning a transformer for summarizing customer feedback.

OpenAI API

- Offers advanced models like GPT-4, ideal for applications like text generation, semantic search, and chatbot development.

- Example: Using OpenAI’s API in LangChain to automate business reports.

PyTorch

- A leading machine learning library used for developing custom AI models and training pipelines.

- Example: Training domain-specific models that can seamlessly integrate with LangChain workflows.

By combining LangChain with these tools, developers can enhance the performance, versatility, and scope of their AI-powered applications, ensuring robust solutions tailored to their specific needs.

Best Practices for Working with LangChain and LLMs

Design Efficient Workflows

- Start with Clear Objectives: Define specific goals for your AI workflows, whether it’s automating customer support or extracting insights from documents.

- Modular Approach: Break workflows into smaller, manageable components (e.g., chains or agents). This improves flexibility and simplifies debugging.

- Iterative Development: Test and refine individual modules before integrating them into the larger system.

Optimize LLM Usage

- Model Selection: Choose an LLM based on your use case. For instance, smaller models like GPT-3.5 are cost-effective for simpler tasks, while GPT-4 is better for complex ones.

- Token Management: Reduce unnecessary prompts and responses by carefully designing input/output templates. This helps cut costs and improve response times.

- Batch Processing: When handling large-scale data, process tasks in batches to optimize throughput and avoid latency.
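
Token management and batch processing can be sketched with a few helpers. The function names are hypothetical, and the estimate of about four characters per token is only a rough rule of thumb for English text; use the model's own tokenizer when exact counts matter.

```python
from typing import Iterator, List, Sequence

def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token for English); not a real tokenizer."""
    return max(1, len(text) // 4)

def truncate_to_budget(text: str, max_tokens: int) -> str:
    """Trim text so the rough estimate stays within the budget."""
    return text[: max_tokens * 4]

def batched(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `size` items."""
    for start in range(0, len(items), size):
        yield list(items[start : start + size])

documents = [f"document {i}" for i in range(5)]
for batch in batched(documents, size=2):
    print(len(batch), [estimate_tokens(doc) for doc in batch])
```

Estimating before sending lets a workflow skip or trim oversized inputs instead of paying for a request that would be rejected or truncated.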

Avoid Common Pitfalls

- Overloading Agents: Avoid using agents for tasks that can be handled by simpler chains. Overreliance on agents can slow down workflows.

- Ignoring Context Management: Ensure proper use of memory to maintain coherence in multi-turn conversations or tasks.

- Neglecting Edge Cases: Test workflows thoroughly to handle unexpected inputs or failures gracefully.
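
Handling failures gracefully often comes down to retrying a call and falling back to a safe message. The sketch below shows one way to do that in plain Python; call_with_retry and flaky_llm_call are hypothetical names, and the simulated timeout stands in for a real API error.

```python
import time
from typing import Callable

def call_with_retry(
    call: Callable[[], str],
    attempts: int = 3,
    base_delay: float = 0.5,
    fallback: str = "Sorry, something went wrong. Please try again.",
) -> str:
    """Retry a flaky call with exponential backoff, then return a fallback message."""
    for attempt in range(attempts):
        try:
            return call()
        except Exception:
            # Wait longer after each failure, but do not sleep after the last attempt.
            if attempt < attempts - 1:
                time.sleep(base_delay * (2 ** attempt))
    return fallback

# Hypothetical flaky call that fails twice before succeeding.
state = {"calls": 0}

def flaky_llm_call() -> str:
    state["calls"] += 1
    if state["calls"] < 3:
        raise TimeoutError("simulated timeout")
    return "ok"

print(call_with_retry(flaky_llm_call, base_delay=0.01))
```

In production code, catching a narrower exception type than Exception avoids retrying errors that will never succeed, such as an invalid API key.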

Real-World Applications and Case Studies

AI-Driven Customer Support

Customer support platforms such as Zendesk are a natural fit for intelligent support systems that combine LangChain with LLMs.

Use Case Example: A chatbot processes customer complaints, fetches relevant FAQs, and escalates unresolved issues to human agents.

Workflow:

- The input chain interprets user queries.

- Memory stores prior conversation context.

- The output chain retrieves appropriate solutions.

Impact: Reduced response times and increased customer satisfaction.

Document Summarization for Legal Firms

A legal tech company leverages LangChain to summarize lengthy contracts or case files.

Workflow:

- Text pre-processing removes unnecessary sections.

- LLM generates concise summaries.

- Agents verify summaries against key legal terms.

Impact: Saves hours of manual work, enabling lawyers to focus on decision-making.
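
The verification step in this workflow can be approximated with a simple term check. This is a minimal sketch under assumptions: the summary text and term list are made up for illustration, and a plain substring match is far cruder than what a production legal tool would need.

```python
from typing import Dict, List

def check_key_terms(summary: str, key_terms: List[str]) -> Dict[str, bool]:
    """Report, for each key term, whether it appears in the summary (case-insensitive)."""
    lowered = summary.lower()
    return {term: term.lower() in lowered for term in key_terms}

def missing_terms(summary: str, key_terms: List[str]) -> List[str]:
    """List the key terms the summary does not mention."""
    report = check_key_terms(summary, key_terms)
    return [term for term, present in report.items() if not present]

# Hypothetical summary and term list for illustration only.
summary = "The agreement sets a 30-day termination notice and limits liability to fees paid."
key_terms = ["termination", "liability", "indemnification"]

print(missing_terms(summary, key_terms))  # ['indemnification']
```

A non-empty result can trigger a second summarization pass or flag the document for human review.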

Future of LangChain and LLMs

Emerging Trends

- AI Personalization: Future AI systems will use LangChain to create highly tailored user experiences, blending memory and LLM capabilities.

- Autonomous Agents: Agents capable of handling multi-task workflows independently will become more common, driving innovation in areas like healthcare and finance.

- Integration with IoT: LangChain could power intelligent decision-making in IoT ecosystems, optimizing energy use or predictive maintenance.

Predictions

- Advanced Contextual Understanding: LLMs will evolve to handle even more nuanced contexts, enabling better performance in real-time applications.

- Wider Accessibility: Open-source tools and models will continue to democratize AI, empowering smaller businesses to leverage LangChain for their needs.

- Interdisciplinary Applications: LangChain will bridge gaps between industries like education, research, and entertainment, creating unified AI ecosystems.

Conclusion

LangChain and LLMs represent the future of intelligent, adaptable AI solutions. By understanding components like chains, agents, and memory, developers can build workflows that are efficient, scalable, and impactful.

Embracing best practices and staying ahead of emerging trends ensures organizations can fully leverage these technologies. Whether automating mundane tasks or solving complex problems, LangChain and LLMs provide limitless opportunities for innovation.

Explore LangChain today, experiment with open-source LLMs, and take the first step toward building smarter, AI-driven solutions!