Vector Databases Simplified: From Fundamentals to Applications

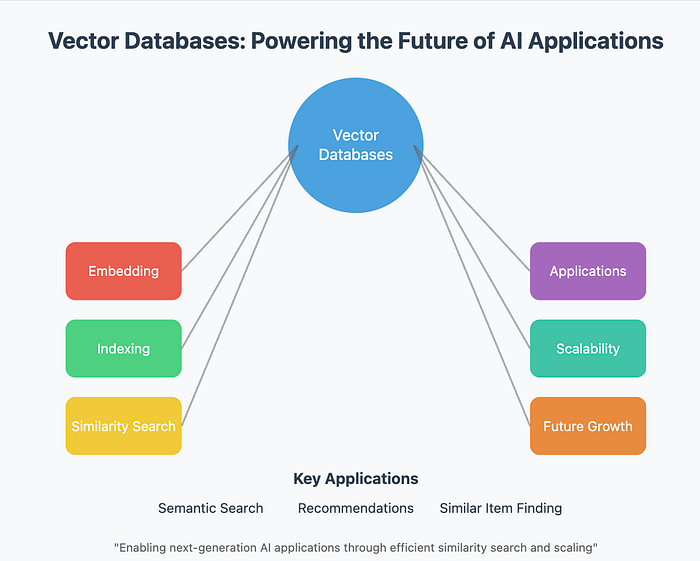

In artificial intelligence and big data, managing and processing high-dimensional data has become crucial. Vector databases are at the forefront of this revolution, serving as indispensable tools for applications like semantic search, recommendation systems, and image recognition. This article builds on our previous discussions about modern data systems and AI integrations, focusing on the mechanics and benefits of vector databases.

Why is this topic relevant? With the surge of AI-driven applications, businesses face the challenge of managing embeddings — dense, high-dimensional numerical representations of data. Traditional databases fail to handle such complex structures efficiently. Enter vector databases: optimized for storing, querying, and retrieving embeddings based on similarity.

What Are Vector Databases?

Vector databases are specialized systems designed to handle, store, and query high-dimensional vector data. Unlike traditional databases that excel at structured, tabular data, vector databases are purpose-built for managing embeddings produced by AI models. These embeddings represent the “essence” of data — whether text, images, or audio — in numerical form.

Key Benefits

- Efficient Embedding Management: AI models generate embeddings for tasks like semantic search or image recognition. Vector databases store and handle these embeddings efficiently.

- Fast Similarity Search: They enable quick comparisons of vectors using advanced similarity metrics, which are vital for real-time applications like personalized recommendations or search engines.

- Scalability: Optimized for large-scale datasets, vector databases can manage millions or even billions of high-dimensional vectors while keeping query latency low.

Applications

- Semantic Search: Enables context-aware searches. For example, searching for “healthy recipes” retrieves contextually relevant content like vegan or low-carb recipes, even if those terms don’t appear explicitly.

- Recommendation Systems: Platforms like Spotify and Netflix use embedding similarity to suggest content based on user behaviour and preferences, a workload that vector databases are built to serve.

- Image and Video Recognition: Stores embeddings derived from images and videos for tasks like facial recognition or object detection.

- NLP: Handles text embeddings generated by models like BERT and GPT for tasks such as translation, sentiment analysis, and chatbot development.

Examples

- Pinecone: A cloud-native vector database built specifically for machine learning.

- Weaviate: An open-source vector database with a focus on semantic search.

- Milvus: Another open-source option optimized for large-scale vector data.

How Vector Databases Work

Overview of the Process

1. Data Input: Vectors (embeddings) are generated using pre-trained AI models and stored in the database.

Example of generating embeddings using Python:

    from sentence_transformers import SentenceTransformer

    # Load a pre-trained model
    model = SentenceTransformer('all-MiniLM-L6-v2')

    # Example data
    documents = ["Artificial Intelligence", "Machine Learning", "Deep Learning"]

    # Generate embeddings
    embeddings = model.encode(documents)
    print(embeddings)

2. Indexing: Indexing organizes the data to optimize search operations. Vector databases often use algorithms like HNSW (Hierarchical Navigable Small World) or PQ (Product Quantization) to speed up similarity searches.

3. Query Execution: When a user queries the database, it converts the input into an embedding (query vector). For example:

    query = "Neural Networks"
    query_vector = model.encode([query])

4. Similarity Search: Retrieves vectors closest to the query vector using metrics such as:

- Cosine Similarity: Measures the angle between two vectors.

- Euclidean Distance: Measures the straight-line distance between two vectors.
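The four steps above can be sketched locally, without any external service. The snippet below is a minimal illustration, not how a vector database is implemented internally: the four-dimensional vectors and the document IDs are made up to stand in for real model output, and `top_k_cosine` is a hypothetical helper that does a brute-force comparison against every stored vector.

```python
import numpy as np

# Hypothetical 4-dimensional embeddings standing in for real model output.
doc_ids = ["ai", "ml", "dl", "cooking"]
doc_vectors = np.array([
    [0.9, 0.1, 0.0, 0.1],
    [0.8, 0.3, 0.1, 0.0],
    [0.7, 0.4, 0.2, 0.0],
    [0.0, 0.1, 0.9, 0.8],
])
query_vector = np.array([0.85, 0.2, 0.05, 0.05])


def top_k_cosine(query, vectors, k):
    """Return (indices, scores) of the k vectors most similar to the query."""
    # After normalisation, a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    v = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    scores = v @ q
    order = np.argsort(-scores)[:k]  # highest similarity first
    return order, scores[order]


indices, scores = top_k_cosine(query_vector, doc_vectors, k=2)
for i, s in zip(indices, scores):
    print(doc_ids[i], round(float(s), 3))
```

A brute-force scan like this is exact but touches every vector, which is the cost that indexing is meant to avoid.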

Code Example:

    from typing import Optional

    from pinecone import Pinecone, ServerlessSpec
    from sentence_transformers import SentenceTransformer


    class VectorDatabaseExample:
        def __init__(self, api_key: str):
            """Initialize the vector database connection and embedding model."""
            # Initialize Pinecone client
            self.pc = Pinecone(api_key=api_key)

            # Initialize the embedding model
            self.model = SentenceTransformer('all-MiniLM-L6-v2')

            # Create or connect to an index
            self.index_name = "article-search"
            if self.index_name not in self.pc.list_indexes().names():
                self.pc.create_index(
                    name=self.index_name,
                    dimension=384,  # Output dimension of the embedding model
                    metric="cosine",
                    spec=ServerlessSpec(cloud="aws", region="us-west-2")
                )
            self.index = self.pc.Index(self.index_name)

        def store_documents(self, documents: list[str], metadata: Optional[list[dict]] = None):
            """Convert documents to vectors and store them in the database."""
            # Generate embeddings for all documents
            embeddings = self.model.encode(documents)

            # Prepare vectors with metadata.
            # IDs are positional, so a later call overwrites doc_0, doc_1, ...
            vectors = []
            for i, embedding in enumerate(embeddings):
                vector = {
                    "id": f"doc_{i}",
                    "values": embedding.tolist(),
                    "metadata": metadata[i] if metadata else {}
                }
                vectors.append(vector)

            # Upsert vectors to the index
            self.index.upsert(vectors=vectors)

        def search_similar(self, query: str, top_k: int = 5):
            """Search for similar documents using a text query."""
            # Generate embedding for the query
            query_embedding = self.model.encode(query)

            # Perform similarity search
            results = self.index.query(
                vector=query_embedding.tolist(),
                top_k=top_k,
                include_metadata=True
            )
            return results


    # Example usage
    if __name__ == "__main__":
        # Initialize the vector database (replace with a real API key)
        vdb = VectorDatabaseExample(api_key="your-api-key")

        # Example documents
        documents = [
            "Vector databases are essential for modern AI applications",
            "Traditional databases cannot efficiently handle similarity search",
            "Semantic search helps users find relevant information quickly"
        ]

        # Store documents
        vdb.store_documents(documents)

        # Search for similar documents
        results = vdb.search_similar("How do AI applications use databases?")
        print("Similar documents:", results)

Importance of Indexing and Optimization

Efficient indexing is crucial for reducing query time, especially in large datasets with billions of vectors. Graph-based techniques such as HNSW enable approximate nearest-neighbour (ANN) search, which trades a small amount of accuracy for a large gain in speed.

Understanding Neighbor Search

Nearest Neighbor Search (NNS)

Nearest neighbour search (NNS) is the process of finding vectors in a dataset that are closest to a given query vector. This search helps in determining the most similar items (e.g., products, images, texts) to a query in high-dimensional space.

Types of Nearest Neighbor Search

1. Approximate Nearest Neighbor Search (ANN):

- ANN finds vectors that are approximately closest to the query vector. It needs far less computation than an exact search, but it may occasionally miss a true nearest neighbour.

- Applications: Used when speed is prioritized over perfect accuracy, such as in real-time recommendation systems.

2. Exact Nearest Neighbor Search (ENS):

- ENS is guaranteed to find the exact closest vectors because it exhaustively checks all vectors in the dataset.

- Applications: Used when precision is critical, such as in sensitive data matching or legal document searches.
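The trade-off between the two can be made concrete with a small experiment. The sketch below compares an exhaustive search with one simple approximate method, random-hyperplane locality-sensitive hashing (LSH). LSH is used here only because it fits in a few lines; it is not the HNSW algorithm mentioned earlier, and the synthetic data, `n_bits`, and the helper names are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.normal(size=(2000, 32))            # synthetic vectors, not real embeddings
query = data[0] + 0.01 * rng.normal(size=32)  # a slightly perturbed copy of vector 0
k = 10


def exact_search(query, data, k):
    """Exhaustive search: compare the query with every vector."""
    distances = np.linalg.norm(data - query, axis=1)
    return np.argsort(distances)[:k]


# Random-hyperplane LSH: vectors whose projections share the same sign
# pattern fall into the same bucket, so only that bucket is scanned.
n_bits = 4
planes = rng.normal(size=(n_bits, 32))


def bucket_of(vectors):
    bits = (vectors @ planes.T) > 0
    return bits.astype(int) @ (1 << np.arange(n_bits))


data_buckets = bucket_of(data)


def approximate_search(query, data, k):
    candidates = np.flatnonzero(data_buckets == bucket_of(query[None, :])[0])
    if len(candidates) == 0:  # empty bucket: fall back to a full scan
        candidates = np.arange(len(data))
    distances = np.linalg.norm(data[candidates] - query, axis=1)
    return candidates[np.argsort(distances)[:k]], len(candidates)


exact = exact_search(query, data, k)
approx, scanned = approximate_search(query, data, k)
recall = len(set(exact.tolist()) & set(approx.tolist())) / k
print(f"scanned {scanned} of {len(data)} vectors, recall@{k} = {recall:.2f}")
```

The approximate search scans only one bucket, so it does less work, and the recall figure shows how many of the true nearest neighbours it still found.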

Real-World Applications

1. Image Similarity Search:

- Search for images with similar features (e.g., finding products with a similar look on an e-commerce platform).

- Example: Given a picture of a red dress, the system can return other red dresses from a database.

2. Real-Time Recommendation Engines:

- Platforms like Netflix or Spotify use NNS to suggest movies, songs, or products based on user preferences.

- Example: If a user watches action movies, the system may recommend other movies based on the similarity of the content to the user’s history.

What Is a Query Vector?

A query vector is a vector representation of a user’s query or input, which can be compared against a dataset of vectors to find the closest matches. In the context of machine learning, these vectors are typically created using embedding models.

How Embeddings from Models Are Converted into Query Vectors

- Embedding models like BERT, GPT, and Word2Vec are used to convert textual data (like a sentence or word) into numerical vectors.

- The query vector represents the underlying semantic meaning of the input, which is crucial for understanding the context beyond just the words.

Example:

- Query: “Best restaurants near me”

- The embedding model (e.g., BERT) converts this query into a dense vector in high-dimensional space, capturing the meaning of the query.

Role of Query Vectors in Semantic Search and Personalization

- Semantic Search: By converting queries into vectors, search engines can match semantically similar queries to documents, even if they don’t share the exact wording.

- Personalization: Query vectors help recommend personalized content based on the user’s past behaviour or preferences, improving user experience.
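One simple way to turn past behaviour into a query vector, sketched below, is to average the embeddings of the items a user has already consumed and rank the remaining items by cosine similarity to that average. This is an assumption made for illustration, not a description of how any particular platform works; the three-dimensional item vectors and item names are invented.

```python
import numpy as np

# Hypothetical item embeddings (3 dimensions for readability).
items = {
    "action_movie_1": np.array([0.9, 0.1, 0.0]),
    "action_movie_2": np.array([0.8, 0.2, 0.1]),
    "action_movie_3": np.array([0.85, 0.15, 0.05]),
    "romance_movie": np.array([0.1, 0.9, 0.2]),
    "documentary": np.array([0.0, 0.2, 0.9]),
}
watched = ["action_movie_1", "action_movie_2"]

# The user's query vector is the mean of the watched items' embeddings.
profile = np.mean([items[name] for name in watched], axis=0)


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Rank everything the user has not watched yet by similarity to the profile.
candidates = [name for name in items if name not in watched]
ranked = sorted(candidates, key=lambda name: cosine(profile, items[name]), reverse=True)
print(ranked)
```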

How and Where Embeddings Make Markets Easier

Embeddings are numerical representations of data (words, sentences, images) in a high-dimensional vector space. These embeddings capture the essence and relationships of the data, making it easier to compare and analyze.

Market Applications

1. E-commerce:

- Personalized Recommendations: By embedding product descriptions, customer preferences, and user behaviour, recommendation systems can suggest products with similar attributes.

- Example: “Similar products” for a red dress can be recommended by comparing the embedding vectors of different dresses.

2. Financial Services:

- Fraud Detection: Transaction data can be embedded to capture transaction patterns. Similar patterns are compared to identify potentially fraudulent activities.

- Example: Embedding vectors can compare transaction details, detecting anomalies that suggest fraud.

3. Healthcare:

- Patient Record Matching: Embedding techniques help match patient records with similar cases, improving diagnosis and treatment suggestions.

- Example: Matching symptoms and medical history vectors to find similar past cases for faster diagnosis.

4. Content Platforms:

- Content Recommendations: Platforms like YouTube or Medium use embeddings to recommend similar videos or articles, enhancing user engagement.

- Example: Embedding vectors can suggest videos related to a user’s past viewing behaviour.

Cosine Similarity in Vector Databases

Cosine Similarity is a metric used to measure the cosine of the angle between two vectors. It helps determine the similarity between vectors in a high-dimensional space by calculating how closely aligned the vectors are.

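Formula

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2} \, \sqrt{\sum_{i=1}^{n} B_i^2}}$$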

Applications in Vector Databases

1. Semantic Search for Text and Documents: Cosine similarity helps measure how similar a query is to the documents in a database. The closer the cosine similarity score to 1, the more similar the documents are.

2. Matching Embeddings for Contextual Similarity: It is used to match vectors that represent similar concepts, even if they are expressed in different words.

Example: Explaining Cosine Similarity with Small Vectors

Let’s say we have two vectors representing product features:

- Vector A: [1, 2, 3]

- Vector B: [2, 3, 4]

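Their dot product is 1×2 + 2×3 + 3×4 = 20, and their lengths are √14 ≈ 3.74 and √29 ≈ 5.39, so the cosine similarity is 20 / (3.74 × 5.39) ≈ 0.993. Since the cosine similarity is close to 1, this indicates that the vectors (and hence the items they represent) are very similar.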
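The same calculation can be checked in a few lines of NumPy. This is a direct transcription of the cosine similarity definition for the two example vectors, nothing more.

```python
import numpy as np

a = np.array([1, 2, 3])
b = np.array([2, 3, 4])

dot = a @ b                                    # 1*2 + 2*3 + 3*4 = 20
norms = np.linalg.norm(a) * np.linalg.norm(b)  # sqrt(14) * sqrt(29)
cosine_similarity = dot / norms

print(round(float(cosine_similarity), 4))  # 0.9926
```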

Nearest Neighbor Search Process

This flowchart shows the process of receiving a user query, embedding it into a query vector, comparing it against database vectors, and returning the nearest neighbours either through approximate or exact methods.

Euclidean Distance in Vector Databases

Euclidean Distance measures the straight-line (or “as-the-crow-flies”) distance between two points in a high-dimensional space. It is the most common way of calculating the distance between two vectors.

Formula:

$$d(A, B) = \sqrt{\sum_{i=1}^{n} (A_i - B_i)^2}$$

Comparison with Cosine Similarity

- Euclidean Distance: Measures the absolute distance between two vectors. It is most useful when you want to measure “how far apart” two points are in space, without caring about their direction.

- Cosine Similarity: Measures the cosine of the angle between two vectors. It is more about how similar the direction of the vectors is, rather than their magnitude.
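A small experiment makes the difference visible. In the sketch below (the vectors are chosen purely for illustration), `scaled` points in the same direction as `a` but is twice as long, while `rotated` has the same length as `a` but points elsewhere.

```python
import numpy as np


def euclidean(u, v):
    return float(np.linalg.norm(u - v))


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


a = np.array([1.0, 2.0, 3.0])
scaled = 2 * a                       # same direction, twice the length
rotated = np.array([3.0, 2.0, 1.0])  # same length, different direction

print("scaled: ", euclidean(a, scaled), cosine(a, scaled))
print("rotated:", euclidean(a, rotated), cosine(a, rotated))
```

Euclidean distance ranks `rotated` as the closer vector, whereas cosine similarity ranks `scaled` as the more similar one, so the two metrics can disagree about which neighbour is "nearest".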

When to Use Euclidean vs. Cosine Similarity

- Euclidean Distance is used when the actual distance between vectors matters, such as in cases where data is inherently spatial, like geospatial data.

- Cosine Similarity is used when the direction or orientation of vectors is more important, such as in text and document similarity where the magnitude (length) of the vector doesn’t matter as much as the angle between them.

Applications

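- Geospatial Data: In applications like mapping or location-based services, Euclidean distance gives the straight-line distance between points in a planar (projected) coordinate system. Applied directly to latitude/longitude values it is only a reasonable approximation over short distances; over longer distances a great-circle formula such as haversine is more accurate.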

Example:

- Distance between two cities based on their coordinates.
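For city-to-city distances, plain Euclidean distance on latitude and longitude degrees does not account for the curvature of the Earth. The sketch below uses the haversine formula instead; the mean Earth radius and the city coordinates are approximations chosen for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (latitude, longitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    h = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


# Approximate city coordinates, used only for illustration.
paris = (48.8566, 2.3522)
berlin = (52.5200, 13.4050)
print(round(haversine_km(*paris, *berlin)), "km")
```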

The Benefits and Applications of Using Indexing in Vector Databases

Why Indexing Is Important

- Enhances Search Speed: By creating an index, the database can quickly locate and retrieve similar vectors without having to scan through the entire dataset.

- Scalability: As the number of vectors grows, indexing keeps search times growing much more slowly than the dataset itself, so queries remain manageable.

- Reduces Computational Overhead: Instead of performing brute-force searches, the index allows more efficient querying and reduces the load on the system.

Types of Indexing Techniques

- Flat Indexing: A simple approach where all vectors are stored in a flat list and the query is compared against every one of them. Results are exact, but search time grows linearly with the dataset, so it becomes slow at scale.

- Inverted File (IVF): Clusters the vectors (typically with k-means) and keeps an inverted list of the vectors belonging to each cluster. A query is compared only against the vectors in the few clusters whose centroids are nearest to it.

- Hierarchical Navigable Small World (HNSW): A graph-based indexing technique that organizes vectors into a multi-layer proximity graph. A search starts in the sparse top layer and descends toward the query's neighbourhood, allowing fast approximate searches.

- Product Quantization (PQ): Compresses each vector by splitting it into sub-vectors and replacing each sub-vector with the ID of its nearest codebook entry. This reduces memory usage and speeds up the search at some cost in accuracy.
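To make the inverted-file idea concrete, here is a minimal NumPy sketch of an IVF-style index. It is a teaching illustration under several assumptions, not the implementation used by any particular database: the data is synthetic, the k-means routine runs only a few iterations, and `n_cells`, `n_probe`, and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
data = rng.normal(size=(5000, 16)).astype(np.float32)  # synthetic vectors


def nearest_centroid(vectors, centroids):
    # Squared distance between every vector and every centroid.
    d2 = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def kmeans(vectors, n_clusters, n_iters=10):
    """A few Lloyd iterations; enough for a demonstration, not production."""
    start = rng.choice(len(vectors), n_clusters, replace=False)
    centroids = vectors[start].copy()
    for _ in range(n_iters):
        assign = nearest_centroid(vectors, centroids)
        for c in range(n_clusters):
            members = vectors[assign == c]
            if len(members):  # keep the old centroid if a cell is empty
                centroids[c] = members.mean(axis=0)
    return centroids


# Build: assign every vector to its nearest centroid (its "cell").
n_cells = 32
centroids = kmeans(data, n_cells)
cell_of = nearest_centroid(data, centroids)
inverted_lists = {c: np.flatnonzero(cell_of == c) for c in range(n_cells)}


def ivf_search(query, k=5, n_probe=4):
    """Scan only the n_probe cells whose centroids are closest to the query."""
    centroid_d2 = ((centroids - query) ** 2).sum(axis=1)
    probe = np.argsort(centroid_d2)[:n_probe]
    candidates = np.concatenate([inverted_lists[c] for c in probe])
    d2 = ((data[candidates] - query) ** 2).sum(axis=1)
    return candidates[np.argsort(d2)[:k]], len(candidates)


query = data[123]
neighbours, scanned = ivf_search(query)
print(neighbours, f"scanned {scanned} of {len(data)} vectors")
```

Raising `n_probe` scans more cells, which improves the chance of finding the true nearest neighbours at the cost of more distance computations.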

Applications

- Large-Scale Recommendation Systems: Indexing allows for faster retrieval of similar items in recommendation engines.

- Real-Time Search in AI Applications: Real-time queries, such as search in voice assistants, benefit from fast indexing to provide near-instant results.

Indexing Improving Search Latency

This flowchart shows how an unindexed database leads to slower searches and high latency, while indexing techniques like HNSW or IVF result in faster searches with lower latency.

Most Popular Vector Databases

Milvus:

- Open-source and optimized for AI and deep learning applications.

- Key Features: Fast indexing, support for both CPU and GPU-based searches, integration with popular machine learning frameworks.

- Use Case: AI applications requiring large-scale vector search, like facial recognition or product recommendation.

Pinecone:

- Cloud-native, managed service focusing on scalability and ease of use.

- Key Features: Automatic scaling, fast similarity search, fully managed infrastructure.

- Use Case: Real-time recommendation engines, and large-scale search platforms.

Weaviate:

- Open-source, schema-based vector database with advanced integrations.

- Key Features: RESTful API, machine learning integration, semantic search.

- Use Case: Knowledge graphs, semantic search in large document repositories.

FAISS (Facebook AI Similarity Search):

- Developed by Facebook AI Research (now part of Meta) and widely used for similarity search in high-dimensional spaces. Strictly speaking, it is a library rather than a full database.

- Key Features: Efficient clustering and searching of embeddings, support for both CPU and GPU searches.

- Use Case: Image search, recommendation systems.

ChromaDB:

- Lightweight, open-source vector database that is well suited to prototyping and personal use cases.

- Key Features: Simple setup, support for embedding-based search, low resource requirements.

- Use Case: Personal projects and small-scale applications.

Free Alternatives to Vector Databases

Options for Beginners and Small Projects

FAISS: An open-source library for local similarity search.

- Features: Fast indexing and searching, support for large datasets.

- Limitations: It is a library only, with no built-in server or managed cloud service, so scaling across machines is left to you.

Annoy (from Spotify): A library for approximate nearest neighbour search.

- Features: Efficient for high-dimensional data, small memory footprint.

- Limitations: Less feature-rich than some commercial solutions.

HNSWlib: An efficient library that implements HNSW graph indexing.

- Features: High performance for approximate nearest neighbour search.

- Limitations: Less documentation and community support than other libraries.

Milvus Community Edition: The open-source, self-hosted version of Milvus.

- Features: The core Milvus engine, without the conveniences of a managed service.

- Limitations: You deploy, operate, and scale it yourself, so it is easiest to recommend for small-scale projects.

Comparison of Features, Limitations, and Ease of Use

| Database | Features | Limitations | Ease of Use |
|---|---|---|---|
| FAISS | Fast indexing, GPU support | No cloud support, limited scalability | Moderate |
| Annoy | Efficient, low memory usage | Less flexible, smaller community | Easy |
| HNSWlib | High-performance hierarchical indexing | Less documentation | Moderate |
| Milvus Community | Open-source, scalable | Limited features in community edition | Easy to use for beginners |

Challenges and Future of Vector Databases

Challenges

Handling Massive Datasets Efficiently:

- As the number of vectors grows, managing storage and ensuring efficient search becomes more complex.

Ensuring Query Accuracy without Latency Issues:

- Balancing accuracy with speed in real-time applications can be difficult.

Integration with Other Systems and Platforms:

- Vector databases need to integrate seamlessly with other data systems, such as data lakes and machine learning pipelines.

Future Directions

Development of Multimodal Vector Databases:

- Combining data from multiple modalities (e.g., text + image) into a single vector database.

Reducing Storage and Computational Costs:

- Using advanced compression techniques to store embeddings more efficiently while reducing computation overhead.

Real-Time Updates to Embeddings:

- Allowing embeddings to be updated in real-time, particularly in dynamic applications such as recommendation engines.

Conclusion

In conclusion, vector databases are becoming increasingly crucial in AI-driven applications where similarity searches and personalized experiences are at the core. Their ability to efficiently manage and query large datasets with advanced indexing techniques and similarity metrics like Euclidean distance and cosine similarity makes them indispensable in fields such as e-commerce, healthcare, and content platforms. Future developments in multimodal data handling and real-time embedding updates will further enhance their capabilities.

FAQs

What is the difference between vector databases and traditional relational databases?

- Vector databases store high-dimensional data (embeddings), enabling similarity search, while relational databases store structured data with predefined schemas for CRUD operations.

Which similarity metric should I use for my application?

- Use cosine similarity for text and semantic search where direction matters, and Euclidean distance when absolute distance between data points is more important (e.g., geospatial data).

How do vector databases scale for billions of embeddings?

- Vector databases scale by using advanced indexing techniques (like HNSW or PQ) to ensure fast retrieval even as the dataset grows, along with optimized hardware for performance.